I've learned about the uncanny valley of voices

If you have ever worked or played around with AI-generated audio or voices, you may have noticed how unnatural they sound compared to human-to-human communication. It's not easy to pinpoint exactly why, but compare this snippet that I made using one of the many text-to-speech generators out there

with Samantha from the movie Her

and you can clearly hear the difference. To me, AI-generated voices sit in the uncanny valley of sound: almost human, yet just unnatural enough to be somewhat unsettling.

I've learned of the concept of "voice presence", or as Sesame puts it: "the magical quality that makes spoken interactions feel real, understood, and valued". They describe the core difficulty like this:

"Even though recent models produce highly human-like speech, they struggle with the one-to-many problem: there are countless valid ways to speak a sentence, but only some fit a given setting. Without additional context—including tone, rhythm, and history of the conversation—models lack the information to choose the best option. Capturing these nuances requires reasoning across multiple aspects of language and prosody."

Sesame is trying to move beyond the flat, robotic tones of traditional text-to-speech systems with what it calls the Conversational Speech Model (CSM). Instead of just converting text to audio, CSM considers the context of the conversation, including tone, rhythm, and emotional cues, so the speech sounds more natural and expressive. This tackles the common issue of AI voices sounding lifeless by letting them respond in a way that feels more human and genuine, with the aim of closing the gap between artificial and authentic-sounding dialogue.

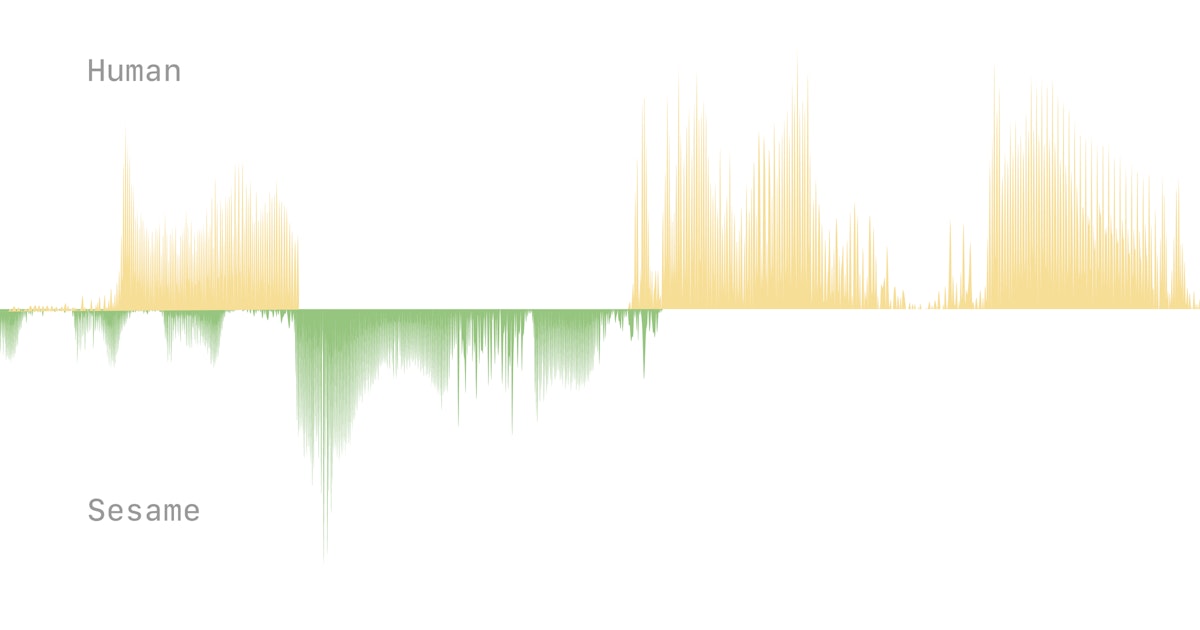
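To make the one-to-many problem a bit more concrete for myself, I wrote a toy sketch. It is not Sesame's model or API: `Rendition`, `CANDIDATES`, and both functions are names I made up, and the prosody numbers are hand-picked for illustration. A real system would learn all of this from audio. The point is only that the same sentence has several valid deliveries, and without conversation history there is nothing to choose between them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    """One hypothetical way to speak a sentence (all values in -1..1)."""
    label: str
    pitch: float   # low .. high
    pace: float    # slow .. fast
    energy: float  # soft .. loud


# Three equally "valid" ways to say the same sentence (made-up numbers).
CANDIDATES = [
    Rendition("flat", 0.0, 0.0, 0.0),
    Rendition("excited", 0.8, 0.6, 0.9),
    Rendition("consoling", -0.4, -0.6, -0.7),
]


def context_free_choice(candidates):
    """Without context there is nothing to rank by, so use the default."""
    return candidates[0]


def context_aware_choice(candidates, history):
    """Pick the rendition closest to the average style of earlier turns.

    `history` is a list of (pitch, pace, energy) tuples, one per prior turn.
    """
    if not history:
        return context_free_choice(candidates)

    # Average each prosody dimension over the conversation so far.
    n = len(history)
    mean = tuple(sum(turn[i] for turn in history) / n for i in range(3))

    def distance(r):
        # Squared Euclidean distance between a candidate and the mean style.
        return sum((a - b) ** 2 for a, b in zip((r.pitch, r.pace, r.energy), mean))

    return min(candidates, key=distance)
```

With a quiet, slow history such as `[(-0.5, -0.5, -0.6), (-0.3, -0.7, -0.8)]`, `context_aware_choice` lands on the "consoling" rendition, while `context_free_choice` always falls back to "flat", which is roughly the lifeless default that plain text-to-speech tends to give you.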

They have created a demo that allows you to converse with their voice models, which is pretty fun to play around with: